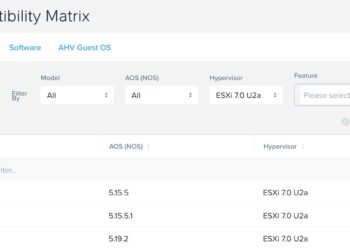

NEW! Nutanix support for VMware ESXi 7.0U2 and 7.0U2a

Nutanix has completed the qualification process to certify VMware ESXi 7.0U2 and ESXi 7.0U2a on the Nutanix NX G4, G5, G6, and G7 platforms. Both ESXi versions are supported with Foundation 5.0+ and LCM 2.4.1.1+. For ESXi 7.0U2 (fresh installs only), you need AOS 5.15.5 LTS or AOS 5.19.1+...