First up in this series is deploying your two Windows Server 2012 R2 VMs, which will be the SQL 2014 Always-On availability group nodes. I have a standard VM template that is built in a nearly fully automated manner with Microsoft MDT 2012 Update 1. If you are still making your VM templates by hand, or some cobbled together semi-automated scripts, then check out Microsoft MDT. It’s free, lots of info on the web, and once I started using it I never looked back. Really, it’s that good (not perfect, but good).

Remember with vSphere 5.5 you should use the web client to fully configure the VM properties, including hardware version 10. In vSphere 5.5 U2 they have allowed the C# client to modify some HW v10 properties, which is a good step forward. And like the Energizer bunny, the C# client will be there in vSphere 6.0, so all is not lost. Pick your favorite client.

SQL 2014 Blog Series

SQL 2014 Always-on AG Pt. 1: Introduction

SQL 2014 Always-on AG Pt. 2: VM Deployment

SQL 2014 Always-on AG Pt. 3: Service Accounts

SQL 2014 Always-On AG Pt 4.: Node A Install

SQL 2014 Always-on AG Pt. 5: Unattended Node B

SQL 2014 Always-on AG Pt. 6: Cluster Configuration

SQL 2014 Always-on AG Pt. 7: TempDB

SQL 2014 Always-on AG Pt. 8: Max Mem & Email

SQL 2014 Always-on AG Pt. 9: SQL Maintenance

SQL 2014 Always-On AG Pt. 10: AAG Setup

SQL 2014 Always-On AG Pt. 11: File Share Witness

Virtual Hardware

Selecting the right virtual hardware is important for the best performance. Here the major food groups are memory, compute, networking, and storage. Remember that installing SQL server inside a virtual machine should still follow many of the best practices in a physical work. Pick your best operating system, patch, make sure your storage array has sufficient IOPS, validate processor speeds, ensure physical RAM is available, etc. Virtualization doesn’t magically make these issues go away. Also remember in a virtualized world that the SQL server is sharing resources with other VMs, so don’t be a hog and overprovision vCPUs and memory, as this can negatively impact other running VMs. Be a team player!

Memory

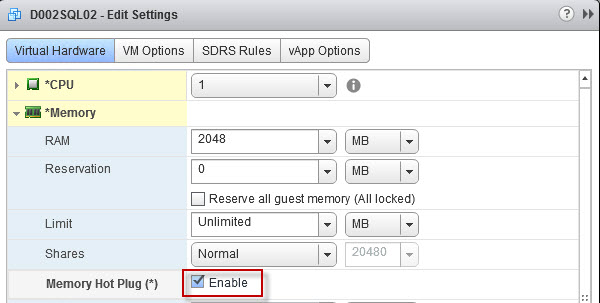

Memory configuration is very dependent on the SQL workload and your applications. In order for SQL server to use large page tables (can help performance) the VM needs at least 8GB of RAM. In general I don’t like memory reservations, but for SQL servers I DO reserve 100% amount of the RAM, so we have guaranteed memory performance. In my small lab I configured 4GB of RAM, but for production I’d do 8GB or more. In my VM template I also enable hot-add of memory, so that you have operational flexibility to add more RAM should your databases need it.

vCPUs

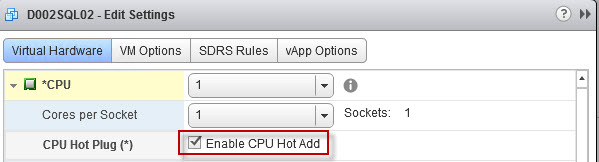

vCPUs is also very dependent on the workload, and remember not to over allocate vCPUs as it can negatively impact performance. For this lab I did one vCPU, but in production I’d do two minimum then monitor. I do like configuring hot add of RAM and CPU, so that I can dynamically add more resources with zero downtime. So let’s turn that on now, to head off future downtime to turn that feature on. Update: As two comments have mentioned, enabling hot vCPU add disables vNUMA. While this may not be a big deal on 2 vCPU VMs, for larger SQL VMs this may negatively impact performance. So for all but the smallest SQL VMs, right size your vCPUs and don’t enable CPU hot add.

Networking

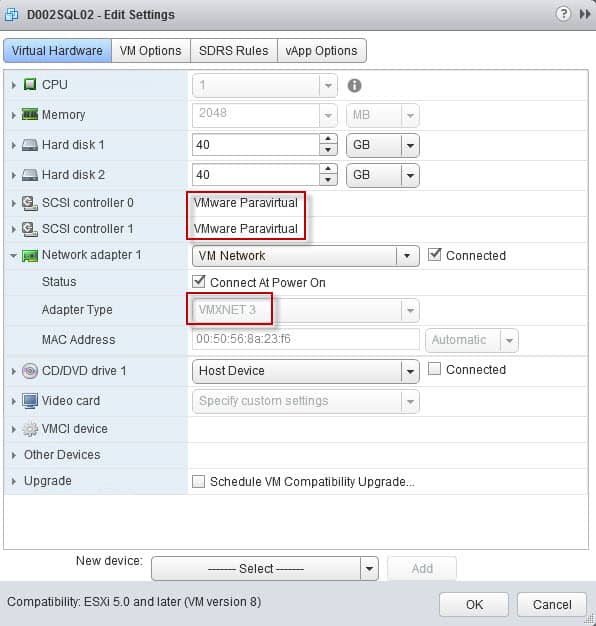

Networking is pretty straight forward, and I always, always use the VMXNET3 adapter for Windows VMs. ‘Nuff said. Just do it. This is a virtualization 101 “tip” that everyone should follow.

Storage

I’m also a pvscsi fan, and only for VDI do I use the SAS controller. Since SQL servers can have heavy IO, I always use the pvscsi controller. I have a blog article on how to inject the VMware drivers into your Windows install ISO, here. Or you can use MDT and inject them that way, which is my new preferred method. The pvscsi controller can yield a 10% improvement in IOPS and up to 30% less CPU utilization. The default queue depth for the pvscsi controller is 256. You should not need to manually adjust the pvscsi queue depth.

The boot drive size may be a slight point of contention. My philosophy is that the C drive is for the OS, patches, and the generally the swap file. It should stay lean, not grow very much, and usually be in the 40-50GB range, at most. SQL binaries should all be installed on a separate drive, so about the only thing using up C space are patches, OS logs, and OS temp files. Those do not exponentially grow, so it’s silly to overprovision the C drive.

Another important factor to keep in mind is spreading the virtual disks across multiple pvscsi controllers. If you try and shove all your I/Os through a single virtual pvscsi card you will likely be disappointed. You can have up to four pvscsi cards per VM. In an AlwaysOn cluster I distribute the multiple disks (database, log, tempDB, etc.) across all four controllers. As an example let’s use the following disks:

C: 40GB (OS Drive) – Controller 0

D: 20GB (SQL Binaries) – Controller 0

E: 10GB (Data files 1) – Controller 1

F: 10GB (Data files 2) – Controller 1

J: 10GB (TempDB) – Controller 3

L: 10GB (DB log files) – Controller 2

T: 10GB (TempDB log files) – Controller 3

S: 15GB (Backup) – Controller 0

I recommend using the KISS principal here. Look at all of the database servers you will be deploying and see if patterns emerge around the number of disks you need and their sizes. Build a template that mirrors the most common configuration. Clearly the drive sizes above need to be adjusted for YOUR environment. 100% chance they will not be the same as I’ve listed above. Right size so that only 80% of the disk capacity is used. You want some breathing room folks. Also, when formatting these disks inside of the guest OS, use a 64KB NTFS allocation file size for all drives but C and D.

Since we are using Windows Server 2012 R2, we do NOT need to worry about partition alignment. The OS automatically aligns partitions on a 1MB boundary. This was true starting in Windows Server 2008..back in the dark ages. Finally, let’s talk about disk provisioning options. You can choose from thin, thick lazy zero, and thick eager zero. There’s almost no performance difference between thin and thick lazy zero. My preference is thick eager zeroed disks, and let your array handle compacting/eliminating the zeros. This will slow down the initial disk creation process, but after they are created no more VMFS metadata updates are needed due to an expanding VMDK if you are using thin provisioning. Metadata updates aren’t a big deal today with arrays supporting VAAI, but still does add a tiny bit of overhead. I’ve seen block arrays initialize EZT disks at greater than 10GB (yes, bytes) per second, so you may not be waiting long for your fresh EZT disks.

Hardware Summary

Here’s a quick recap of the decision highlights:

- Use hardware version 10, when deploying on vSphere 5.5

- Allocate 8GB or more to the VM so you can use large page tables

- Reserve all VM memory to prevent unexpected swapping and eliminate the swap file

- Don’t over-allocate vCPUs – Use what you will actually need

- Configure hot add/plug of vCPUs and memory for future non-disruptive expansion

- Use VMXNET3 NIC

- Use four pvscsi controllers, distributing all your disks across the four controllers

- Use eager zero thick disks

- Remove unnecessary hardware like floppy drives and serial ports

Summary

Creating a hardware baseline for your SQL servers is very important. Understanding how the virtualized hardware behaves is key to extracting the best performance, and helping ensure you can meet your SLAs. Build a baseline SQL server VM template, which suffices for the majority of your SQL deployments. Keep it simple, and don’t go overboard with drives. Remember you have to manage this beast, and mirror the settings on your other AlwaysOn AG node.

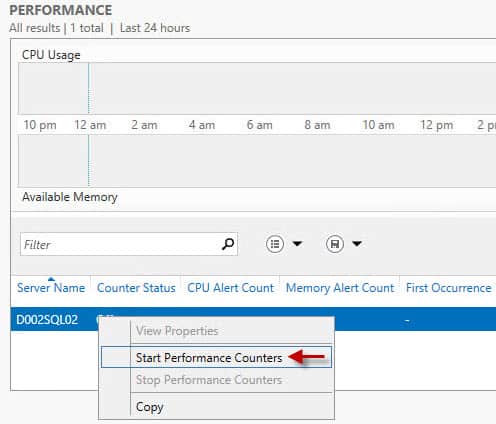

Now that you’ve configured one node of your cluster, duplicate all of the settings (or clone the VM) for your second node. Once you have two running VMs, all drives formatted, joined to the domain, patched, and ready to go then proceed to the third installment here. I’d also recommend starting the performance counters on the server, so you can monitor basic stats like CPU utilization.

Some good points, but you may want to think twice about enabling vCPU hotadd on a SQL VM, since this disables vNUMA which would kick in once you assign more than 8 vCPUs: http://kb.vmware.com/kb/2040375 http://sqlperformance.com/2013/12/system-configur…

Hi Derek,

nice article, as usual! 🙂

Enabling CPU Hot Add disable vNUMA possibilities on your VM. With 2 vCPUs it's not a big deal as vNUMA is not used (and should not be), but what if you have a big AlwaysOn AG ?

Romain

Romain,

I did not know hot CPU disables vNUMA. I'll update the article with that information. Thanks!

Hi Derek,

Is SQL alwaysOn supported on NFS datastores?

Thanks.

Randy

yes as far as I know there is no restriction of using SQL backed by VMDKs hosted on a NFS datastore.

It depends…

MS would recommend SAN but NAS is doable as long it guarantees data write ordering and write-through. See http://support.microsoft.com/kb/304261

Other info at http://kb.vmware.com/selfservice/microsites/searc…

I would apply KISS principle here…

Do you need microsoft cluster services for an sql 2014 always on config? If so then the official statement is that this isn't supported on nfs. Can you clarify please?

Thanks,

Randy

No for an AlwaysOn Availability Group you do NOT need Microsoft clustering services. As you will see later in the series, the AG configuration is totally within SQL and requires no cluster configuration.

I've checked out MDT, but I've yet to see any tutorials on using it in conjunction with vCenter (templates) for OS deployment. Can you share any references/guides you used? Great blog post idea! 😉 I can't find anything on this topic (MDT & VMware) and my hair is almost fully gray. Even a quick run down on the major steps would be helpful. I'm manually creating my templates, and then converting back to VM to update. Not sure what the better way is.

Ken, I had to use a number of resources to build my MDT server and fully customize it. It was a lot of trial and error, and reading. Unfortunately to do some advanced features like detecting VMware VMs, takes some poking around and good google skills. It's been so long since I built my MDT server that I don't remember the resources I used. There's nothing really that special about using MDT with VMware. Like I said, I did have some logic to detect VMware VMs and automatically install VMware tools. But beyond that, MDT works just like any other… Read more »

Thanks for the quick response. I'm trying to educate myself and will go through the process. I think my Google-Fu is pretty good. There is one thing I'm trying to grasp though. You build the image in Deployment Workbench, but you still create the VM as a template? Meaning, you still deploy new VMs in vCenter using the templates correct?

The process I used was as follows: I had a MDT job which was kicked off via a MDT ISO image, which installed the OS, patches, and applications. 100% automated. I then had a separate capture job which created a new WIM out of my customized image (apps, patches, etc.). I then used that new WIM via another MDT job to create a VM template. The second deployment job just did a Windows update, so that my new VM templates would be up to par in terms of patching. Basically a two step process. I could also use the captured… Read more »

I’ve built many SQL AAG’s by following your guidelines in this series. Please don’t ever delete this blog! Thank you! Lol