Twitter: #VAPP5613, Alex Fontana (VMware)

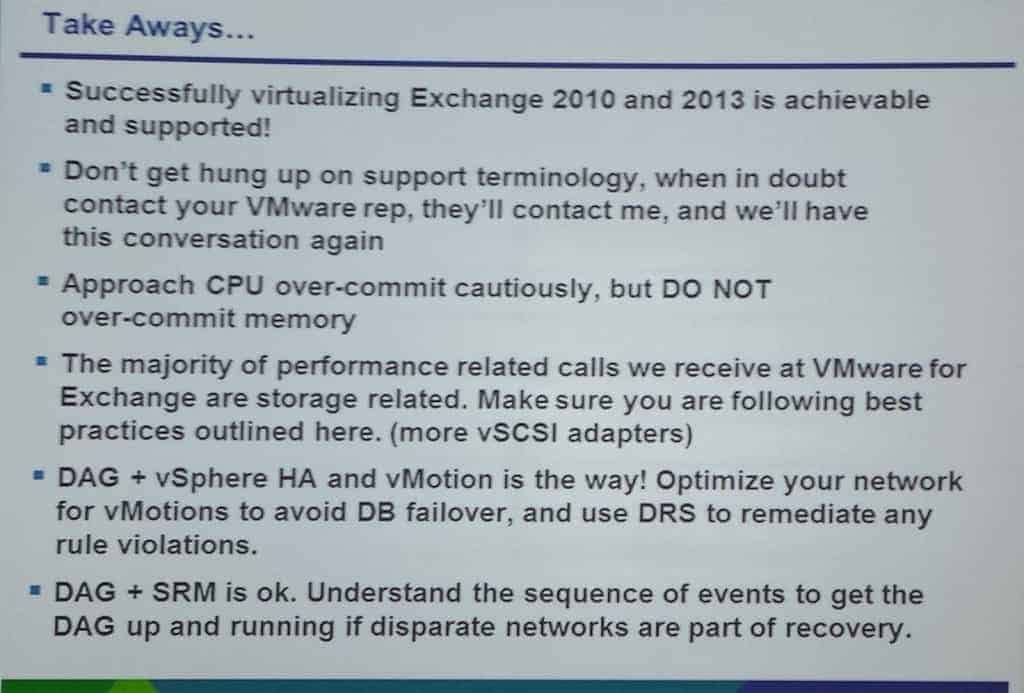

This session was skillfully presented and jam-packed with Exchange on VMware best practices for architects and Exchange administrators. Can you put Exchange VMDKs on NFS storage? Can you use vSphere HA and DRS? How can you avoid DAG failover during vMotion? What’s the number-one cause of Exchange performance problems? All of these questions and more were answered in this session. If you think a “click next” install of Exchange is adequate for an enterprise deployment, then you need to find a new job. Period.

Agenda

- Exchange on VMware vSphere overview

- VMware vSphere Best Practices

- Availability and Recovery Options

- Q&A

Continued Trend Towards Virtualization

- Move to 64-bit architecture

- Exchange 2013 has a 50% I/O reduction compared to Exchange 2010

- Rewritten store process

- Full virtualization support at RTM for Exchange 2013

Support Considerations

- You can virtualize all roles

- You can use DAGs together with vSphere HA and vMotion

- Supported storage: Fibre Channel, FCoE, and iSCSI (native and in-guest)

- What is NOT supported? VMDKs on NFS, thin disks, VM snapshots

Best Practices for vCPUs

- CPU over-commitment is possible and supported, but approach it conservatively

- Enable hyper-threading at the host level and on the VM (HT sharing: Any)

- Enable non-uniform memory access (NUMA). Exchange is not NUMA-aware, but ESXi is, and it will schedule an SMP VM’s vCPUs onto a single NUMA node

- Size the VM to fit within a NUMA node: e.g., if the NUMA node has 8 cores, keep the VM at 8 vCPUs or fewer

- Use vSockets to assign vCPUs and leave “cores per socket” at 1

- What about vNUMA in vSphere 5.0? It does not apply to Exchange, since Exchange is not NUMA-aware
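To make the NUMA sizing rule concrete, here is a minimal Python sketch, assuming you already know how many physical cores sit in each NUMA node on your host. The function names and the returned dictionary keys (`sockets`, `cores_per_socket`) are hypothetical illustrations, not vSphere API properties; the check simply encodes the session’s advice to stay within one node and present vCPUs as vSockets with one core each.

```python
def fits_numa_node(vcpus: int, cores_per_numa_node: int) -> bool:
    """True if the VM's vCPU count fits inside one physical NUMA node.

    Only physical cores are counted; hyper-threads are ignored, matching the
    session's "8-core NUMA node means at most 8 vCPUs" example.
    """
    if vcpus < 1 or cores_per_numa_node < 1:
        raise ValueError("vCPU and core counts must be positive")
    return vcpus <= cores_per_numa_node


def vcpu_layout(vcpus: int, cores_per_numa_node: int) -> dict:
    """Suggest a vSocket layout: one vCPU per virtual socket, 1 core per socket."""
    if not fits_numa_node(vcpus, cores_per_numa_node):
        raise ValueError(
            f"{vcpus} vCPUs exceed the {cores_per_numa_node}-core NUMA node"
        )
    # Hypothetical keys for illustration; map them to your own tooling.
    return {"sockets": vcpus, "cores_per_socket": 1}


if __name__ == "__main__":
    print(vcpu_layout(8, 8))       # fits: 8 virtual sockets with 1 core each
    print(fits_numa_node(12, 8))   # would span two NUMA nodes
```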

CPU Over-Commitment

- Allocating 2 vCPUs to every physical core is supported, but don’t do it. Keep a 1:1 ratio until a steady workload is achieved

- 1 physical core = 2400 megacycles = 375 users at 100% utilization

- 2 vCPUs to 1 physical core (2:1) = 1200 megacycles per VM = 187 users per VM at 100% utilization
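The megacycle math above is easy to script. This is a rough sketch using only the figures quoted in the session (2400 megacycles and 375 users per physical core, which works out to 6.4 megacycles per user); the function name is hypothetical, and real sizing should come from Microsoft’s Exchange sizing guidance for your hardware.

```python
from fractions import Fraction
import math

# Figures quoted in the session: one physical core ~ 2400 megacycles ~ 375 users.
MEGACYCLES_PER_CORE = 2400
USERS_PER_CORE = 375
MEGACYCLES_PER_USER = Fraction(MEGACYCLES_PER_CORE, USERS_PER_CORE)  # 32/5 = 6.4


def capacity_per_share(vcpus_per_core: int) -> tuple[Fraction, int]:
    """Megacycles and users per share when vcpus_per_core vCPUs share one core.

    Fractions keep the arithmetic exact, so 1200 / 6.4 = 187.5 floors to 187
    without any binary floating-point drift.
    """
    if vcpus_per_core < 1:
        raise ValueError("vcpus_per_core must be >= 1")
    megacycles = Fraction(MEGACYCLES_PER_CORE, vcpus_per_core)
    users = math.floor(megacycles / MEGACYCLES_PER_USER)
    return megacycles, users


if __name__ == "__main__":
    for ratio in (1, 2):
        mc, users = capacity_per_share(ratio)
        print(f"{ratio}:1 -> {float(mc):.0f} megacycles, {users} users at 100% utilization")
```

Doubling up vCPUs on a core does not create capacity; it just splits the same 2400 megacycles, which is why the session recommends staying at 1:1.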

Best Practices for Virtual Memory

- No memory over-commitment. None. Zero.

- Do not disable the balloon driver

- If you can’t guarantee memory, then use reservations
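A quick way to sanity-check the memory rules is to compare configured VM memory against host RAM. The sketch below is a hypothetical helper (the `VmMemory` class and `memory_problems` function are made up for illustration), and it ignores ESXi’s own per-VM memory overhead, so treat a clean result as necessary rather than sufficient.

```python
from dataclasses import dataclass


@dataclass
class VmMemory:
    name: str
    configured_mb: int
    reserved_mb: int = 0


def memory_problems(host_physical_mb: int, vms: list[VmMemory],
                    require_full_reservation: bool = False) -> list[str]:
    """List memory over-commitment and, optionally, missing full reservations."""
    problems = []
    configured = sum(vm.configured_mb for vm in vms)
    # "No memory over-commitment": configured VM memory must fit in host RAM.
    if configured > host_physical_mb:
        problems.append(
            f"host overcommitted: {configured} MB configured on {host_physical_mb} MB"
        )
    # When memory can't otherwise be guaranteed, reserve all of it per VM.
    if require_full_reservation:
        for vm in vms:
            if vm.reserved_mb < vm.configured_mb:
                problems.append(
                    f"{vm.name}: {vm.reserved_mb} of {vm.configured_mb} MB reserved"
                )
    return problems
```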

Storage Best Practices

- Use multiple vSCSI adapters

- Use eager-zeroed thick virtual disks

- Use a 64KB allocation unit size when formatting NTFS

- Follow storage vendor recommendations for path policy

- Set the power policy to High Performance

- Don’t confuse DAG and MSCS when it comes to storage requirements: a DAG does not require shared storage, while MSCS does

- Microsoft does NOT support VMDKs on NFS storage for any Exchange data, including the OS and binaries. See Microsoft’s full Exchange virtualization support statement for details.

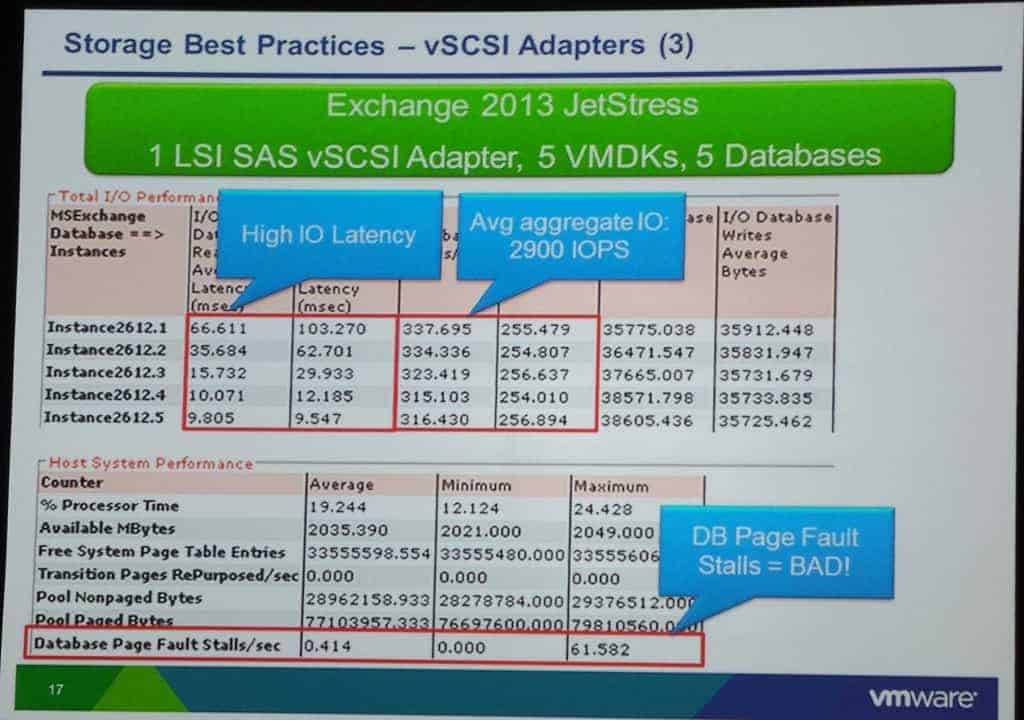

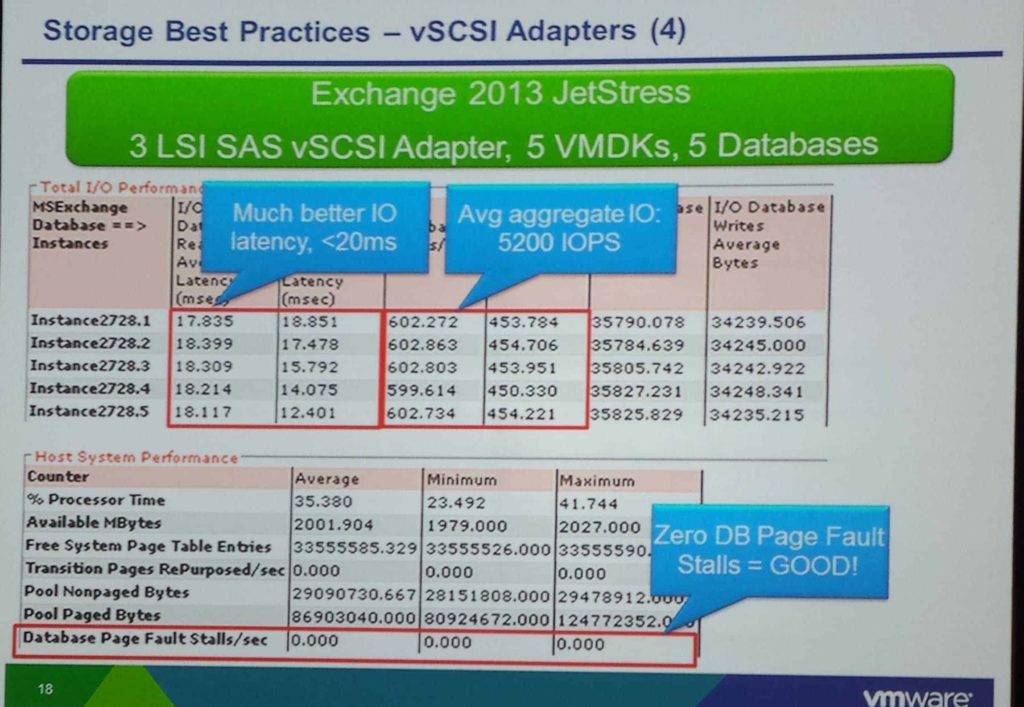

Why multiple vSCSI adapters?

- Avoid inducing queue depth saturation within the guest OS

- Queue depth is 32 for LSI, 64 for PVSCSI

- Add all four SCSI controllers to the VM

- Spread disks across all four controllers

The two charts show the test results with one vSCSI adapter versus four. With just one adapter, performance was unacceptable and the database was stalling. Simply redistributing the VMDKs across multiple vSCSI adapters vastly increased performance and eliminated the stalls.
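If you want to script the disk layout, a simple round-robin assignment spreads VMDKs evenly across all four controllers. This is a minimal sketch under stated assumptions: addresses use the familiar SCSI(bus:unit) notation, unit 7 is skipped because it is reserved for the controller, and 15 disks per controller is assumed as the ceiling. The function is hypothetical and does not talk to vCenter.

```python
def assign_disks(disks: list[str], controllers: int = 4) -> dict[str, str]:
    """Round-robin disks across SCSI controllers, returning SCSI(bus:unit) labels.

    Unit 7 is skipped because it is reserved for the controller itself, and
    units 0-15 are assumed available (15 disks per controller).
    """
    if not 1 <= controllers <= 4:
        raise ValueError("a VM can have 1 to 4 SCSI controllers")
    next_unit = [0] * controllers
    placement = {}
    for index, disk in enumerate(disks):
        bus = index % controllers          # rotate through the controllers
        unit = next_unit[bus]
        if unit == 7:                      # reserved for the controller
            unit += 1
        if unit > 15:
            raise ValueError(f"controller {bus} is full")
        placement[disk] = f"SCSI({bus}:{unit})"
        next_unit[bus] = unit + 1
    return placement


if __name__ == "__main__":
    for disk, addr in assign_disks(["os", "db1", "db2", "db3", "log1"]).items():
        print(disk, addr)
```

Spreading disks this way gives each controller its own queue, which is the point of avoiding queue depth saturation inside the guest.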

When to use RDMs?

- Don’t use RDMs: there is no performance gain

- Capacity is not a problem with vSphere 5.5, which supports VMDKs up to 62TB

- Your backup solution may require RDMs if hardware array snapshots are needed for VSS

- Consider: large Exchange deployments may use a lot of LUNs, and ESXi hosts are limited to 255 LUNs (effectively per cluster, since every host in the cluster needs to see the same LUNs)
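To see how quickly the 255-LUN limit bites, here is back-of-the-envelope arithmetic as a Python sketch. It assumes every host in the cluster is presented every Exchange LUN, which is what lets vMotion, DRS and HA place any Exchange VM on any host; the function name and the example inputs are hypothetical.

```python
ESXI_MAX_LUNS = 255  # per-host limit cited in the session


def lun_headroom(mailbox_vms: int, luns_per_vm: int, shared_datastore_luns: int = 0) -> int:
    """LUNs left under the per-host limit when every host sees every LUN.

    Because any host may need to run any Exchange VM, each host must be zoned
    to all LUNs, so the per-host limit effectively caps the whole cluster.
    A negative result means the design does not fit.
    """
    used = mailbox_vms * luns_per_vm + shared_datastore_luns
    return ESXI_MAX_LUNS - used


if __name__ == "__main__":
    print(lun_headroom(8, 24, 10))   # hypothetical: 8 mailbox VMs, 24 RDMs each
    print(lun_headroom(12, 24))      # hypothetical larger design
```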

What about NFS and In-Guest iSCSI?

- NFS – Explicitly not supported for Exchange data by Microsoft

- In-guest iSCSI – Supported for DAG storage

Networking Best Practices

- Use multiple NICs for vMotion

- Use VMXNET3 NIC

- Allocate multiple NICs to participate in the DAG

- Can use standard or distributed virtual switch

Avoid Database Failover during vSphere vMotion

- Enable jumbo frames on all VMkernel ports to reduce the number of frames generated; this helped A LOT

- Increase the cluster heartbeat setting (SameSubnetDelay) to 2000ms

- Always dedicate vSphere vMotion interfaces
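Both tweaks above come down to simple arithmetic. In this sketch the cluster declares a node down after SameSubnetDelay multiplied by SameSubnetThreshold milliseconds of missed heartbeats; the defaults of 1000 ms and 5 are assumptions based on Windows Server 2008 R2/2012 failover clustering, so check your own cluster before relying on them. The frame count assumes 40 bytes of IPv4 and TCP headers per frame with no options. Both function names are hypothetical.

```python
def heartbeat_timeout_ms(same_subnet_delay_ms: int = 1000, same_subnet_threshold: int = 5) -> int:
    """Missed-heartbeat window before failover clustering declares a node down.

    Defaults are assumed Windows Server 2008 R2/2012 values, not read from a cluster.
    """
    return same_subnet_delay_ms * same_subnet_threshold


def frames_needed(payload_bytes: int, mtu: int = 1500, header_bytes: int = 40) -> int:
    """Frames to move payload_bytes, assuming header_bytes of IPv4+TCP headers per frame."""
    per_frame = mtu - header_bytes
    return -(-payload_bytes // per_frame)  # integer ceiling division


if __name__ == "__main__":
    print(heartbeat_timeout_ms())        # 5000 ms with the assumed defaults
    print(heartbeat_timeout_ms(2000))    # 10000 ms with SameSubnetDelay at 2000 ms
    gib = 1024 ** 3
    print(frames_needed(gib, 1500), frames_needed(gib, 9000))
```

A longer heartbeat window rides out the brief stun at the end of a vMotion, and jumbo frames cut the frame count roughly sixfold, so the host spends less time moving the memory of a busy mailbox VM.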

High Availability with vSphere HA

- App HA in vSphere 5.5 can monitor/restart Exchange services

- vSphere HA allows the DAG to restore its protection after a host failure by restarting the failed member

- Supports vSphere vMotion and DRS

DAG Recommendations

- One DAG member per host; if you have multiple DAGs, members of different DAGs can be co-located on the same host

- Create an anti-affinity rule for each DAG

- Enable DRS in fully automated mode

- In vSphere 5.5, HA evaluates DRS rules (such as the DAG anti-affinity rules) when restarting VMs
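The placement rules lend themselves to a simple audit. This hypothetical sketch takes a map of VM name to (DAG, host), builds one anti-affinity group per DAG, and flags any host running two members of the same DAG, while allowing members of different DAGs to share a host as the session describes. It works on plain dictionaries you would populate yourself; it is not a vSphere or PowerCLI API.

```python
from collections import defaultdict


def anti_affinity_rules(placement: dict[str, tuple[str, str]]) -> dict[str, list[str]]:
    """One rule per DAG: the member VMs that must run on separate hosts."""
    rules = defaultdict(list)
    for vm, (dag, _host) in placement.items():
        rules[dag].append(vm)
    return {dag: sorted(vms) for dag, vms in sorted(rules.items())}


def violations(placement: dict[str, tuple[str, str]]) -> list[str]:
    """Hosts running more than one member of the same DAG.

    Members of different DAGs sharing a host are allowed and not reported.
    """
    by_dag_host = defaultdict(list)
    for vm, (dag, host) in placement.items():
        by_dag_host[(dag, host)].append(vm)
    return sorted(
        f"{dag} on {host}: {', '.join(sorted(vms))}"
        for (dag, host), vms in by_dag_host.items()
        if len(vms) > 1
    )


if __name__ == "__main__":
    placement = {
        "mbx1": ("DAG1", "esx1"),
        "mbx2": ("DAG1", "esx2"),
        "mbx3": ("DAG2", "esx1"),  # different DAG, so sharing esx1 is fine
    }
    print(anti_affinity_rules(placement))
    print(violations(placement))
```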

vCenter Site Recovery Manager + DAG

- Fully supported

- Showed a scripted workflow that fails over the DAG

And finally, the key takeaways from the session: