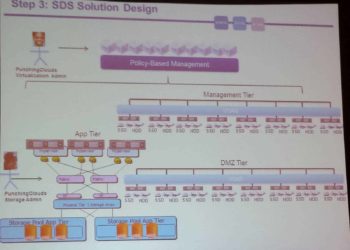

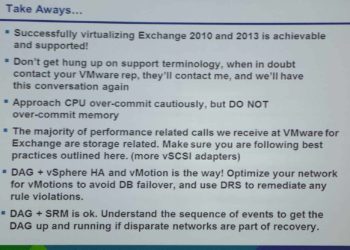

VMworld 2013: Software Defined Storage the VCDX Way

This was a great "put your architecture cap on" session by two well-known VCDXs, Wade Holmes and Rawlinson Rivera. Software defined <insert virtualization food group here> is all the rage these days, be it SDN (networking), SDS (storage), SDDC (datacenter) or software defined people. Well, maybe not quite at the people stage, but...