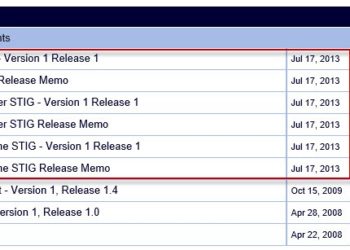

DISA VMware vSphere 5 STIG Released

Hot off the press: DISA has released the VMware vSphere 5 STIG, which includes vCenter, ESXi, and VM components. If you're familiar with U.S. Government IT systems, you've probably heard of the DISA STIGs. STIGs are Security Technical Implementation Guides, which set the security baseline for a variety of operating systems, network...