Home Assistant: iOS Focus Mode Automation Triggers

If you are a Home Assistant user and would like to trigger Home Assistant automations based on iOS Focus Mode changes, this post is for you. In iOS 18 and earlier, all Focus modes except Sleep can use built-in personal automations to trigger Home Assistant actions when the Focus mode is turned on or off. However, for some reason Apple treated the Sleep Focus mode differently in iOS 18 and earlier, frustrating many users. Thankfully, the iOS 26 beta has remedied this...

Digital Privacy Decoded: Simple Ways to Secure Your Information

In today's interconnected world, safeguarding your personal information is more crucial than ever. With telecommunications companies collecting extensive data about your communication habits, it's essential to understand how this information is used and how you can protect your privacy. From disabling Customer Proprietary Network Information (CPNI) to securing your Social Security Number and managing your credit, there are numerous strategies to enhance your digital security. This guide delves into practical steps you can take to control your data, prevent unauthorized access,...

Part 2: Ruckus Unleashed (200.18+) Best Practices Guide

This is Part 2 of 2 of the 2025 version of the Ruckus Unleashed home Wi-Fi Best Practices Guide. This refreshed guide is based on the 200.18 (and later) version of Ruckus Unleashed, which received a completely new UI. If you are using Ruckus Unleashed 200.17 or earlier, check the Related Posts section below for links to that Best Practices guide. In Part 1 we configured a number of settings including system time, country code, email alerting, radio configuration, and more. Part...

Part 1: Ruckus Unleashed (200.18+) Best Practices Guide

Welcome to my 2025 updated unofficial Ruckus Unleashed Best Practices Guide for enterprise-grade home Wi-Fi. This series has been completely redone to support Ruckus Unleashed 200.18 and newer. Unleashed 200.18 and later feature an entirely new UI/UX that mirrors that of their paid Ruckus One controller. IMHO, this is a huge advancement for Unleashed users, as the UI is much more modern and easier to navigate. If you have not yet upgraded to Unleashed 200.18 or later, you can refer back...

3-2-1 Go: A Step-by-Step Guide to Implementing Foolproof Backups

With so much important data now stored digitally, safeguarding it against catastrophic loss is more important than ever. Whether the cause is a natural disaster, hardware failure, or a cyberattack, the risk of data loss is real. Protecting precious photos, financial documents, and other personal or business data requires a robust disaster recovery plan with a layered backup strategy, so that your information remains secure and recoverable. In this blog post I cover...

Geek Chic Eyewear: My Optometrist Saga, Eyewear Collection & More

Welcome to the fourth installment of the "Geek Chic Eyewear" series, where I share my personal journey through the world of eyewear and optometry. This series aims to provide a comprehensive look into the technical aspects of eyewear, focusing on frame selection and lens materials. Having navigated the complexities of vision correction—from LASIK in my twenties to dealing with presbyopia in my forties—I've gained insights that I hope will assist you in making informed decisions about your eye health and...

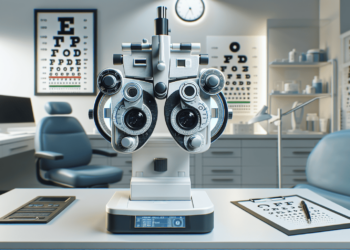

Geek Chic Eyewear: Optometry Tools, Eye Health and Insurance

This is Part 3 of the "Geek Chic Eyewear" series, and it covers the role of advanced optometry equipment, eye health, vision insurance tips and more. The post first examines Zeiss tools like the i.Profiler Plus, VISUPHOR 500, VISUFIT 1000, and i.Terminal 2. These devices enhance vision assessments: the i.Profiler Plus uses wavefront technology for accurate prescriptions, while the VISUPHOR 500 digitizes the subjective refraction process. The VISUFIT 1000 and i.Terminal 2 provide detailed 3D measurements to ensure proper eyewear...

Geek Chic Eyewear: Understanding Lens Materials and Designs

In Part 2 of my "Geek Chic Eyewear" series, I will delve into the various lens materials and designs. While frames (covered in Part 1) play a significant role in the aesthetics of your eyewear, the quality of the lenses is paramount. Poor-quality lenses can severely detract from the overall functionality and satisfaction of your glasses, regardless of how stylish the frames may be. This post will explore topics such as single vision lenses, progressive lenses, lens tints, lens coatings, and computer glasses....

Geek Chic Eyewear: A Comprehensive Guide to Frame Selection

Welcome to my "Geek Chic Eyewear" series for finding the perfect pair of glasses. The series is designed to offer educational insight from a technology standpoint. Even if you don't currently require vision correction, it's likely that you will as you reach your 40s. Yes, those "dreaded" reading glasses that "old" people need. Or maybe you just want quality non-prescription sunglasses. Either way, I've got you covered! Although the topic of eyewear diverges from my usual smart home and other...

Essential Tips for a Stable Matter over Thread Network

Matter over Thread (MoT) is a recent addition to the smart home ecosystem. Unlike more established protocols such as Zigbee and Z-Wave, MoT is still in its early stages and may present some challenges. This post will outline several key areas to consider for maintaining optimal Thread network stability:

- Matter Multi-Admin Gotchas
- Thread and Wi-Fi RF Contention
- Thread Border Router Updates
- Systematic Network Troubleshooting
- Matter Device Firmware

By adhering to the recommendations in this post, I have successfully scaled my MoT network from 25 to nearly...
